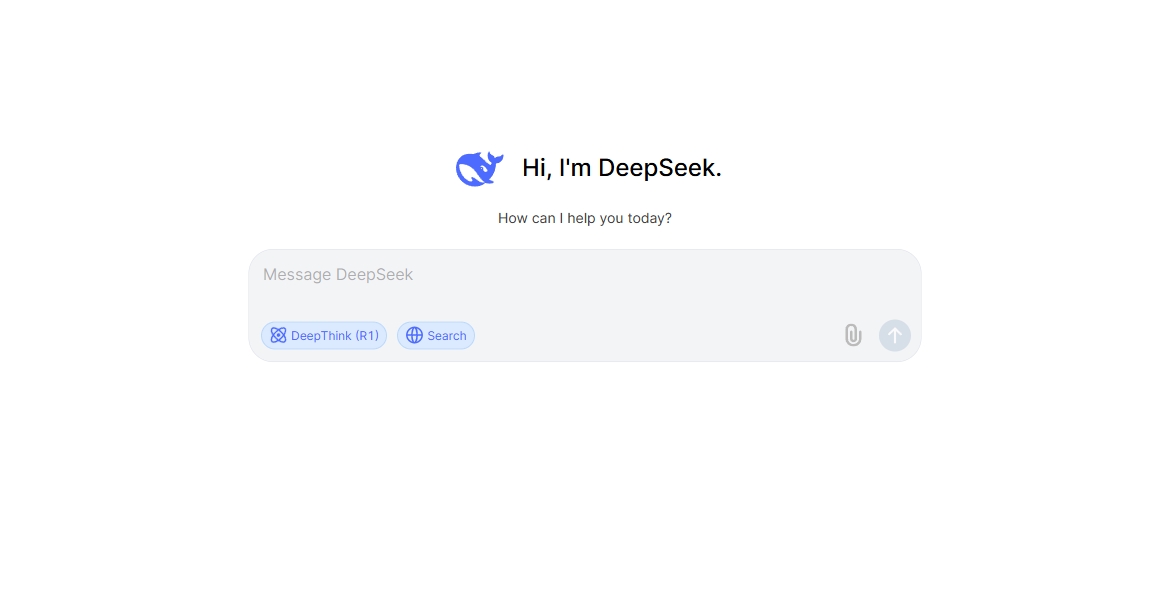

How to Run DeepSeek Locally: A Complete Setup Guide for AI Enthusiasts

Artificial Intelligence (AI) is rapidly evolving, and models like DeepSeek are becoming widely used for text generation, coding assistance, and research. Running DeepSeek locally offers multiple advantages, including privacy, lower latency, and full control over the AI model.

However, installing and running DeepSeek AI locally requires some technical setup. This guide provides a step-by-step method to install DeepSeek on your computer using Ollama, a tool designed to run AI models efficiently on local hardware.

Part 1: System Requirements for Running DeepSeek Locally

Before installing DeepSeek, you need to ensure your system meets the minimum hardware and software requirements.

Minimum Hardware Requirements:

CPU: Multi-core processor (Intel i5/Ryzen 5 or better).

RAM:

- 8GB+ for the 1.5B model.

- 16GB+ for the 8B model.

- 32GB+ for the 14B model.

- 64GB+ for the 32B model and larger.

Storage: At least 20GB of free disk space (varies by model size).

GPU (Optional but Recommended): NVIDIA RTX 3060 or better for large models.

Supported Operating Systems:

✅ Windows 10/11 (WSL recommended for better performance).

✅ macOS (M1/M2/M3 or Intel).

✅ Linux (Ubuntu 20.04 or later recommended).
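The requirements above can be checked from a terminal before installing anything. The following is a minimal sketch for Linux and macOS (it is not part of Ollama); the function names `total_ram_gib` and `free_disk_gib` are made up for this example, and the 20 GiB threshold is the storage figure from this guide.

```shell
#!/bin/sh
# Print total physical RAM in whole GiB (0 if it cannot be determined).
# The value is rounded down and can read slightly below the nominal size,
# for example 15 on a machine sold with 16GB.
total_ram_gib() {
    if [ -r /proc/meminfo ]; then
        # Linux: MemTotal is reported in kB.
        awk '/^MemTotal:/ { printf "%d\n", $2 / 1024 / 1024 }' /proc/meminfo
    elif command -v sysctl >/dev/null 2>&1; then
        # macOS: hw.memsize is reported in bytes.
        sysctl -n hw.memsize 2>/dev/null |
            awk '{ printf "%d\n", $1 / 1024 / 1024 / 1024 }'
    else
        echo 0
    fi
}

# Print free space in whole GiB on the filesystem that holds the given path.
free_disk_gib() {
    df -Pk "${1:-.}" | awk 'NR == 2 { printf "%d\n", $4 / 1024 / 1024 }'
}

ram=$(total_ram_gib)
disk=$(free_disk_gib "$HOME")
echo "RAM:  ${ram:-0} GiB"
echo "Disk: ${disk:-0} GiB free"
if [ "${disk:-0}" -lt 20 ]; then
    echo "Warning: less than 20 GiB free; larger models may not fit."
fi
```

On Windows, run the script inside WSL, where `/proc/meminfo` is available.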

Part 2: Installing Ollama to Run DeepSeek

Ollama is a lightweight tool that simplifies running AI models locally. Here’s how to install it on different operating systems.

Installing Ollama on macOS

- Open Terminal.

- Run the following command: brew install ollama

- Verify the installation by running: ollama --version

Installing Ollama on Windows

- Download Ollama from the official website.

- Run the installer and follow the on-screen instructions.

- Open Command Prompt (cmd) and type: ollama --version

- If the version number appears, Ollama is successfully installed.

Installing Ollama on Linux (Ubuntu/Debian-based)

- Open Terminal.

- Run the following command: curl -fsSL https://ollama.com/install.sh | sh

- Confirm the installation: ollama --version
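The three install paths above differ only by operating system, so a small helper can print the right one. This is an illustrative sketch: `install_hint` is a hypothetical function name, and it only echoes the commands listed in this guide without running them.

```shell
#!/bin/sh
# Print the install step from this guide that matches an OS name.
# With no argument, the name is taken from `uname -s`.
install_hint() {
    os=${1:-$(uname -s)}
    case "$os" in
        Darwin) echo "brew install ollama" ;;
        Linux)  echo "curl -fsSL https://ollama.com/install.sh | sh" ;;
        *)      echo "Download the installer from the official website" ;;
    esac
}

install_hint
```

Whichever path you take, `ollama --version` remains the final check that the install worked.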

Part 3: Downloading and Setting Up DeepSeek R1

Once Ollama is installed, the next step is to download and set up DeepSeek R1.

Choosing the Right DeepSeek Model

DeepSeek offers multiple versions depending on system capabilities:

| Model | RAM Requirement | Best For |
| --- | --- | --- |
| DeepSeek R1 1.5B | 8GB+ | Light AI tasks |
| DeepSeek R1 8B | 16GB+ | General text generation, coding |
| DeepSeek R1 14B | 32GB+ | Complex problem-solving, research |
| DeepSeek R1 32B+ | 64GB+ | Advanced AI applications |
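The table can be expressed as a simple lookup from installed RAM to a model tag. The sketch below assumes the thresholds in the table are hard cutoffs; `recommend_model` is a hypothetical helper, and mapping the "32B+" row to the `deepseek-r1:32b` tag is an assumption made for illustration.

```shell
#!/bin/sh
# Map installed RAM (whole GiB) to the largest model the table allows.
# Prints the Ollama tag, or returns 1 if even the smallest model is too big.
recommend_model() {
    ram_gib=$1
    if   [ "$ram_gib" -ge 64 ]; then echo "deepseek-r1:32b"
    elif [ "$ram_gib" -ge 32 ]; then echo "deepseek-r1:14b"
    elif [ "$ram_gib" -ge 16 ]; then echo "deepseek-r1:8b"
    elif [ "$ram_gib" -ge 8 ];  then echo "deepseek-r1:1.5b"
    else
        echo "none"
        return 1
    fi
}
```

For example, `recommend_model 16` prints `deepseek-r1:8b`. Treat the result as a starting point: actual memory use also depends on context length and on what else is running.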

Downloading DeepSeek Model with Ollama

To install DeepSeek on your local machine, open Terminal (macOS/Linux) or Command Prompt (Windows) and run:

ollama pull deepseek-r1:8b

Replace 8b with your desired model version, such as 1.5b or 14b. The download size varies, so ensure you have sufficient disk space.
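To avoid typos in the tag, the pull step can be wrapped in a small function. This is a sketch, not an official script: `pull_deepseek` and its list of accepted sizes are assumptions based on the models named in this guide, while `ollama pull` and `ollama list` are standard Ollama commands.

```shell
#!/bin/sh
# Pull a DeepSeek R1 model by size and then list the installed models.
# Returns 2 for a size this guide does not cover, 127 if ollama is missing.
pull_deepseek() {
    size=${1:-8b}
    case "$size" in
        1.5b|8b|14b|32b) ;;
        *) echo "unsupported size: $size" >&2; return 2 ;;
    esac
    if ! command -v ollama >/dev/null 2>&1; then
        echo "ollama is not installed or not on PATH" >&2
        return 127
    fi
    ollama pull "deepseek-r1:$size" && ollama list
}

# Usage (starts a multi-gigabyte download):
#   pull_deepseek 14b
```

If you later need the disk space back, `ollama rm deepseek-r1:8b` removes a downloaded model.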

Part 4: Running DeepSeek Locally

After downloading the model, you can start DeepSeek with the following command: ollama run deepseek-r1:8b

Testing DeepSeek with a Basic Prompt

Try running this to verify the model: echo "What is the capital of France?" | ollama run deepseek-r1:8b

If DeepSeek responds correctly, the setup is successful!
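The same check can be scripted so it reports pass or fail instead of relying on you to read the output. The sketch below passes the prompt as an argument to `ollama run`, which Ollama also accepts; `mentions` and `smoke_test` are hypothetical helper names, and the check only looks for the word "Paris" in the reply.

```shell
#!/bin/sh
# Succeed when the text on stdin contains the given word (case-insensitive).
mentions() {
    grep -qi -- "$1"
}

# Ask the model a question with a known answer and check the reply.
smoke_test() {
    model=${1:-deepseek-r1:8b}
    if ollama run "$model" "What is the capital of France?" | mentions "Paris"; then
        echo "OK: $model answered as expected"
    else
        echo "FAILED: no expected answer from $model" >&2
        return 1
    fi
}

# Usage (requires Ollama and a downloaded model):
#   smoke_test deepseek-r1:8b
```

The first run can take a while because the model has to be loaded into memory before it answers.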

Part 5: Optimizing DeepSeek for Better Performance

If DeepSeek runs slowly or lags, try these optimizations:

✅ Increase CPU Threads

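Ollama picks a thread count automatically, and ollama run does not accept a --num-threads flag. To override the default, start an interactive session with ollama run deepseek-r1:8b and enter: /set parameter num_thread 4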

Replace 4 with the number of available CPU cores.

✅ Use GPU Acceleration (If Available)

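Ollama uses a supported NVIDIA GPU automatically once the NVIDIA drivers are installed, so there is no flag to pass (ollama run has no --use-gpu option). To confirm the GPU is in use, run ollama ps while the model is loaded and check the PROCESSOR column.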

This significantly improves performance for larger models.

✅ Reduce Response Latency

Limit response length with the num_predict parameter, which caps the number of generated tokens. ollama run has no flag for this; in an interactive session, enter: /set parameter num_predict 100
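Ollama's way to make such settings persistent is a Modelfile with PARAMETER lines, where `num_thread` sets the CPU thread count and `num_predict` caps the response length. The sketch below writes one; `write_modelfile` and the model name `deepseek-r1-tuned` are hypothetical, and the values 4 and 100 are only examples.

```shell
#!/bin/sh
# Write a Modelfile that layers runtime parameters on top of a base model.
#   $1 = base model tag          $2 = CPU threads
#   $3 = max tokens per response $4 = output path (default: ./Modelfile)
write_modelfile() {
    out=${4:-Modelfile}
    {
        echo "FROM $1"
        echo "PARAMETER num_thread $2"
        echo "PARAMETER num_predict $3"
    } > "$out"
}

# Usage (requires Ollama and the base model):
#   write_modelfile deepseek-r1:8b 4 100
#   ollama create deepseek-r1-tuned -f Modelfile
#   ollama run deepseek-r1-tuned
```

Creating a derived model this way does not download the weights again; it reuses the base model and stores only the new settings.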

Part 6: Troubleshooting Common Issues

If you encounter errors, try these solutions:

❌ Error: "Ollama not recognized" (Windows)

✅ Restart your system after installing Ollama.

✅ Ensure Ollama is added to system PATH variables.

❌ Error: "Insufficient Memory"

✅ Close unnecessary applications to free up RAM.

✅ Use a smaller model (e.g., switch from 14B to 8B).

❌ Error: "CUDA device not found"

✅ Ensure you have installed NVIDIA CUDA drivers.

✅ Run nvidia-smi in the terminal to check GPU status.
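Two of the errors above come down to a tool missing from PATH, which can be checked in one pass. This is a minimal sketch; `check_tools` is a hypothetical helper that only looks up each command and does not start it.

```shell
#!/bin/sh
# Report, for each tool name given, whether it can be found on PATH.
check_tools() {
    for tool in "$@"; do
        if location=$(command -v "$tool" 2>/dev/null); then
            echo "$tool: found at $location"
        else
            echo "$tool: not on PATH"
        fi
    done
}

check_tools ollama nvidia-smi
```

A "not on PATH" line for ollama points to the first error in this section, and one for nvidia-smi suggests the NVIDIA drivers are not installed.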

Conclusion

Running DeepSeek locally provides privacy, efficiency, and better control over AI processing. By following this guide, you can set up and optimize DeepSeek on your machine, ensuring smooth AI-assisted tasks for coding, writing, and research.

If you found this guide useful, let us know in the comments!


Daniel Walker

Editor-in-Chief

This post was written by Editor Daniel Walker whose passion lies in bridging the gap between cutting-edge technology and everyday creativity. The content he created inspires the audience to embrace digital tools confidently.
